Getting Started with AWS and Terraform: 01 - Creating an EC2 Instance

Introduction

In this series of blog posts, we will build an enterprise-scale web infrastructure.

We’ll start small with a simple virtual machine and iterate as our needs develop, until eventually we have a highly available, secure, and resilient web infrastructure capable of meeting the needs of tens of customers!

All of this will be managed with the popular Infrastructure As Code tool, Terraform.

In this first post, we will:

- Configure Terraform with a pre-existing AWS account

- Deploy an EC2 instance to AWS

- Allow incoming connections to the EC2 instance from our local device

- SSH into our EC2 instance using an SSH key pair

Prerequisites

It would be helpful for you to have some familiarity with the following concepts/tools:

- What Infrastructure as Code (IaC) is and some IaC tools (e.g. Terraform, Pulumi, CloudFormation)

- What a cloud service provider is (e.g. AWS, Google Cloud, Azure)

- Familiarity with using the terminal

Before we start building, you’ll want to have set up a couple of things:

- An AWS account

- The Terraform CLI installed

- An IDE or text editor of your preference (I use Neovim, btw)

Step 0 - Create a directory for your project

As we’ll be building our infrastructure using Terraform, we’ll need somewhere to write this code.

Let’s create a directory called my-unicorn-project and inside that directory create another directory called infra.

Step 1 - Define our Terraform provider

Now we will create our main.tf file inside our infra directory where we will define our cloud provider.

# /infra/main.tf

terraform {

required_version = ">= 1.2.0"

required_providers {

aws = {

source = "hashicorp/aws"

version = ">= 5.41.0"

}

}

}

Open a terminal in the infra directory and run terraform init. Terraform will download the required provider plugins so they can be used to configure our services.

Step 2 - Create an AWS EC2 instance

Now that we have successfully initialized Terraform with the AWS provider, we can define AWS resources in our code. In this step we will create three things:

- An AWS EC2 instance resource

- An Amazon Machine Image (AMI) data source for the EC2 instance to run

- A Terraform output to return the public IP address of the created EC2 instance so we can easily connect to it

Create a new file called unicorn-api.tf.

Inside the file, add:

# /infra/unicorn-api.tf

data "aws_ami" "amazon_x86_image" {

most_recent = true

owners = ["amazon"]

name_regex = "^al2023-ami-ecs.*"

filter {

name = "architecture"

values = ["x86_64"]

}

}

resource "aws_instance" "unicorn_vm" {

ami = data.aws_ami.amazon_x86_image.id

instance_type = "t2.micro"

tags = {

Name = "Unicorn"

}

}

output "unicorn_vm_public_ip" {

description = "Public IP address of the unicorn vm"

value = aws_instance.unicorn_vm.public_ip

}

In this file we’ve defined an AWS EC2 instance resource and pointed it at an AMI data source. The data source selects the most recent Amazon-owned x86_64 image whose name starts with al2023-ami-ecs, an image that has Docker pre-installed.

We’ve also defined an output which returns the EC2 instance’s public IP address. We’ll need this later.
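Because the AMI is picked by a name pattern, it can be handy to see which image Terraform actually resolved. As a small optional sketch (the output name selected_ami_name is an assumption for illustration, not part of the original project), an extra output could expose the image name:

```hcl
# /infra/unicorn-api.tf (optional addition)

# Hypothetical output name; reports which AMI the data source resolved to.
output "selected_ami_name" {
  description = "Name of the AMI selected by the aws_ami data source"
  value       = data.aws_ami.amazon_x86_image.name
}
```

After an apply, terraform output selected_ami_name should print the matched image name, which makes it easier to confirm the name_regex and architecture filter behave as intended.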

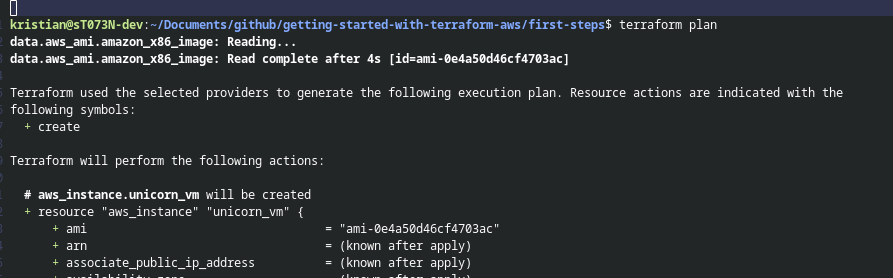

Now open the terminal and run terraform plan to review the proposed changes prior to applying them.

It fails! But why?

Even though we’ve created an AWS account and downloaded both Terraform and the AWS provider, Terraform still needs to authenticate with AWS in order to create these resources.

Step 2.1 - Create an AWS API Key for Terraform

In order for Terraform to manage resources in AWS it needs access to your AWS account. We can accomplish this by creating credentials specifically for this project and configuring Terraform to use those credentials to create and manage resources.

Create a new file called aws-provider-key.tf and add the following content:

# /infra/aws-provider-key.tf

provider "aws" {

access_key = ""

secret_key = ""

region = "ap-southeast-2"

}

You can select a region closer to you. As I’m in Australia I’m opting to use the Australian region. See here to find out what other regions AWS have available and pick the one closest to you.

Next you need to create two things in the AWS Management Console:

- Create a new User with administrator permission

- Create an access key for the user

Note: giving users more access than they require (such as admin access) is not good practice. Users should only ever be given the minimum level of access needed to complete a task. I’ll leave it as an exercise for the reader to find out what the minimum required permissions are for this user. For more information about the principle of least privilege, see here.

Take the access key and corresponding secret key and add them to the provider block you defined earlier.

These API keys should be treated like credentials. Do not commit this file to source control. Otherwise the keys could be discovered and you’ll need to replace them.
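One way to keep the keys out of the file altogether is to pass them in as sensitive input variables. The following is a minimal sketch of that alternative, not the setup used in the rest of this post; the variable names aws_access_key and aws_secret_key are assumptions for illustration:

```hcl
# /infra/aws-provider-key.tf (alternative sketch)

# Hypothetical variable names. Their values can be supplied through the
# TF_VAR_aws_access_key and TF_VAR_aws_secret_key environment variables,
# so nothing secret is written into the .tf file.
variable "aws_access_key" {
  type      = string
  sensitive = true
}

variable "aws_secret_key" {
  type      = string
  sensitive = true
}

provider "aws" {
  access_key = var.aws_access_key
  secret_key = var.aws_secret_key
  region     = "ap-southeast-2"
}
```

The AWS provider can also read the AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY environment variables directly, in which case access_key and secret_key can be left out of the provider block entirely.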

Now try running terraform plan once more. Your output should look something like this:

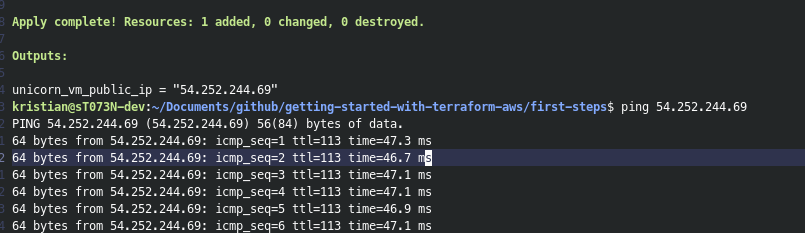

Now run terraform apply to deploy this EC2 resource to AWS.

Step 3 - Connect to our EC2 Instance

If you’ve made it this far it means you’ve successfully configured Terraform with an AWS account and have deployed an EC2 instance to AWS. Nice!

Let’s try to connect to this instance.

You can see the public IP address of the instance as one of the outputs from the apply step. Alternatively, you can run terraform output to print all the outputs from the project.

Copy the IP address and in a terminal run ping <the-ip-address>.

No response…

This is because when we define the EC2 instance, a default security group is applied to it. This security group allows incoming connections on any port using any protocol but only from other AWS resources within the same security group…

If we want to connect to our instance, we need to make our own rules.

We don’t want just anyone to connect to this service so for now we’ll only allow our local device’s public ip address in the address range. You can find this easily using a site like whatismyipaddress.com.

Open unicorn-api.tf and add the following resources, providing your public IP address for the cidr_ipv4 value:

# /infra/unicorn-api.tf

resource "aws_security_group" "unicorn_api_security_group" {

name = "unicorn-api-security-group"

}

resource "aws_vpc_security_group_ingress_rule" "allow_from_local_device" {

security_group_id = aws_security_group.unicorn_api_security_group.id

description = "allow ingress on any port from my local device"

cidr_ipv4 = "<YOUR_IP_ADDRESS_HERE>/32"

ip_protocol = "-1"

}

This rule allows an incoming connection on any port using any protocol but only from the defined ip address range.
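Since your public IP address may change over time, it can help to pass it in as an input variable rather than editing the resource each time. Here is a hedged sketch of the same rule written that way; the variable name local_device_ip and its validation pattern are assumptions for illustration, and this version would take the place of the rule above rather than sit alongside it:

```hcl
# /infra/unicorn-api.tf (alternative sketch)

# Hypothetical variable; supply it with -var or a TF_VAR_local_device_ip
# environment variable when running plan/apply.
variable "local_device_ip" {
  description = "Public IPv4 address of the device allowed to connect"
  type        = string

  validation {
    # Loose shape check only: four dot-separated groups of 1-3 digits.
    condition     = can(regex("^\\d{1,3}(\\.\\d{1,3}){3}$", var.local_device_ip))
    error_message = "Provide a plain IPv4 address such as 203.0.113.10, without a /32 suffix."
  }
}

resource "aws_vpc_security_group_ingress_rule" "allow_from_local_device" {
  security_group_id = aws_security_group.unicorn_api_security_group.id
  description       = "allow ingress on any port from my local device"
  cidr_ipv4         = "${var.local_device_ip}/32"
  ip_protocol       = "-1"
}
```

The validation only checks the general shape of the address; AWS still rejects values that are not valid IPv4 addresses when the rule is created.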

Next, apply the security group to the EC2 instance, adding the following property to the EC2 instance:

# /infra/unicorn-api.tf

resource "aws_instance" "unicorn_vm" {

# ...

vpc_security_group_ids = [aws_security_group.unicorn_api_security_group.id]

}

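With these new resources defined, we can re-apply our changes with terraform apply. The plan output shows whether Terraform updates the EC2 instance in place or replaces it; if the instance is replaced, its public IP address will change, so we’ll need to get the latest IP address to ping it.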

Once the resources have been updated and the latest IP address copied, we can now ping our EC2 instance with:

ping <the-ip-address>

Step 4 - SSH into the EC2 Instance

Before we move on: when experimenting with Terraform and public cloud resources, it’s good practice not to leave those resources active, otherwise you might run up a surprising bill.

What we’ve created so far isn’t expensive, but to build the habit let’s quickly destroy these resources by running terraform destroy.

In this step we’re going to create an SSH key pair using Terraform. The public key will be added to the EC2 instance as one of its authorized SSH public keys.

We’ll save the private key as a local file so we can reference it when we try to connect to the EC2 instance.

First, add the following providers to our main.tf:

# infra/main.tf

required_providers {

# ...

tls = {

source = "hashicorp/tls"

version = "~> 4.0.4"

}

local = {

source = "hashicorp/local"

version = "2.4.1"

}

}

Above we’re adding two new providers to our Terraform config:

- tls, which allows us to create TLS private keys and certificates

- local, which allows us to manage local resources (such as creating new files) via Terraform

Since we’ve added new providers, we need to run terraform init again to download their plugins.

Create a new file named ssh_key.tf and add the following:

# infra/ssh_key.tf

# create a tls key pair using rsa algorithm

resource "tls_private_key" "ssh_key" {

algorithm = "RSA"

rsa_bits = 4096

}

# save the private key to a local file named "key.pem"

resource "local_file" "rsa_key" {

content = tls_private_key.ssh_key.private_key_openssh

filename = "${path.module}/key.pem"

file_permission = "0600"

}

# define an AWS key pair, providing the public key from the tls key pair defined above

resource "aws_key_pair" "key_pair_for_ec2_instance" {

key_name = "ssh-key"

public_key = tls_private_key.ssh_key.public_key_openssh

}

Above we’ve just:

- Created a tls private key resource

- Created a local file with the file name key.pem and added the SSH private key to its contents

- Created an AWS key pair resource which will register the SSH public key with our AWS EC2 instance
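The local provider also offers a local_sensitive_file resource, which is meant for content that should be treated as sensitive. As an optional sketch (a swapped-in alternative, not what this post uses), the local_file resource above could be written like this instead:

```hcl
# infra/ssh_key.tf (alternative sketch)

# Same file, same permissions, but using the resource type intended
# for sensitive content. This would replace the local_file resource above.
resource "local_sensitive_file" "rsa_key" {
  content         = tls_private_key.ssh_key.private_key_openssh
  filename        = "${path.module}/key.pem"
  file_permission = "0600"
}
```

Either way, the private key still ends up in the Terraform state, so the file resource type only affects how the content is handled in Terraform’s output.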

Now, add the key pair to our EC2 instance and, while we’re at it, let’s add the instance’s public DNS name as an output for when we SSH into it:

# infra/unicorn-api.tf

resource "aws_instance" "unicorn_vm" {

# ...

key_name = aws_key_pair.key_pair_for_ec2_instance.key_name

}

output "unicorn_vm_public_dns_name" {

description = "Public DNS name of the unicorn vm"

value = aws_instance.unicorn_vm.public_dns

}

Note: As the name suggests, the tls private key should be treated like any other credential and not be committed to version control. It is also worth noting that when you create a private key in Terraform, the key is stored unencrypted in the Terraform state, including the state backup file. With this in mind, for this exercise I would suggest excluding key.pem, terraform.tfstate, and terraform.tfstate.backup from your version control. For production workloads, the key pair can be generated outside of Terraform, and only the public key shared.
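To illustrate the production suggestion, here is a minimal sketch that registers a public key generated outside of Terraform (for example with ssh-keygen). The variable name ssh_public_key_path is an assumption for illustration; with this approach the tls_private_key and local_file resources would no longer be needed, and the private key never enters the Terraform state:

```hcl
# infra/ssh_key.tf (alternative sketch)

# Hypothetical variable pointing at an existing public key file.
variable "ssh_public_key_path" {
  description = "Path to an SSH public key generated outside Terraform"
  type        = string
}

# Only the public half of the key pair is read and sent to AWS.
resource "aws_key_pair" "key_pair_for_ec2_instance" {
  key_name   = "ssh-key"
  public_key = file(var.ssh_public_key_path)
}
```

You would then pass the matching private key file to ssh with the -i flag instead of key.pem.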

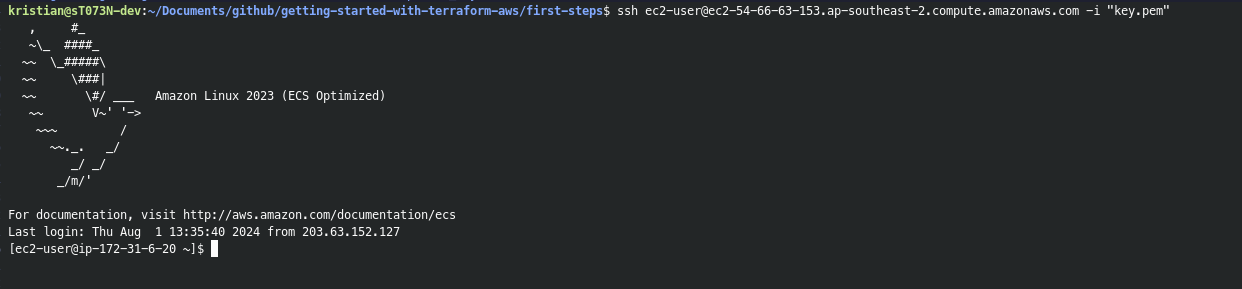

Now run terraform apply, copy the DNS name output value and then in a terminal run:

ssh ec2-user@<EC2-DNS-NAME> -i 'key.pem'

And voila! You’ve successfully:

- Deployed a virtual machine instance to a public cloud environment

- Configured network security rules on the instance to accept incoming connections from only your device

- Configured an SSH key pair for the instance and SSH’d into the instance

- Done it all in Terraform so these steps can be easily repeated with just a single command!

Now again run terraform destroy so you don’t wake up in a month’s time with a shocking bill from AWS!

In the next post we will update our EC2 instance to build and run a web server that can be accessed from any web browser.

See here for the final code output from this post.

Bonus Step!

In order to both ping and SSH into our EC2 instance, we created a custom security rule that allowed any kind of connection from our public IP address to the EC2 instance.

However, in most cases we don’t want our rules to be this loose (remember the principle of least privilege). How might you change your security group rule to only allow SSH connections to the instance?
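If you want to check your answer, here is one possible approach, offered as a sketch rather than the only solution: restrict the rule to TCP on port 22. The resource name allow_ssh_from_local_device is an assumption for illustration, and this rule would replace the allow-everything rule from Step 3:

```hcl
# /infra/unicorn-api.tf (one possible answer)

# Allows only SSH (TCP port 22) from a single IPv4 address.
resource "aws_vpc_security_group_ingress_rule" "allow_ssh_from_local_device" {
  security_group_id = aws_security_group.unicorn_api_security_group.id
  description       = "allow ssh from my local device"
  cidr_ipv4         = "<YOUR_IP_ADDRESS_HERE>/32"
  ip_protocol       = "tcp"
  from_port         = 22
  to_port           = 22
}
```

With this rule in place, ping will stop working, because ICMP traffic is no longer allowed, while ssh should continue to connect.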